Our Journey to WebAssembly Packs

Part of what makes Taskless powerful is that we can pull down code (we call them Packs) unique to the services you call. Stripe errors don't look like OpenAI errors, and getting a "400 bad request" means different things for different APIs. Recently, we changed how this entire system works, moving from Lua-based plugins to pure WebAssembly. Here's why we did it, what we learned, and how it's made Taskless better.

A Quick WebAssembly Primer

Before I dive into our migration story, let's talk about why WebAssembly is everywhere these days. It was announced in 2015 as a way to run high-performance code in browsers, but it's grown into something much more interesting. Think of it as a universal binary format that's both fast and safe. That "write once, run anywhere" promise Java made in the 90s? WebAssembly actually delivers on it, but without needing a massive runtime.

This is why you're seeing WebAssembly pop up everywhere - browsers, serverless platforms, edge computing. Heck, even Microsoft Flight Simulator uses Wasm for plugins now. We're way past the hype phase and into "this actually solves real problems" territory.

Our Lua Problem

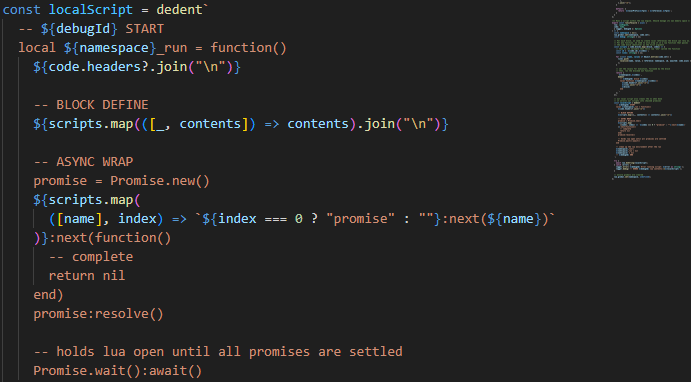

Initially, we ran all our plugins through a Lua VM on top of WebAssembly. At the time, this seemed clever. Lua is tiny, it's easy to embed, and the syntax is simple enough that most developers can read it without much training. Perfect for configuration-style code.

It was perfect until we started building our Ruby client. Lua has this interesting approach to versioning - they don't really care about backward compatibility. At all. Here's what I mean:

- Lua 5.2 doesn't have integers. Every number is a double-precision float (the integer subtype only arrived in 5.3). Yes, really.

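- Lua 5.4 added attributes to `local` declarations (`<const>` and `<close>`), and 5.3 had already added new syntax such as the `//` integer-division operator. If you write code for 5.4, it does not necessarily run on 5.2.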

This became a real problem because our Ruby client was going to use RLua (based on Lua 5.2) while our Node.js client used wasmoon (Lua 5.4). The same plugin would work in Node but break in Ruby.
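To make the float-only point concrete: TypeScript's `number` is also an IEEE 754 double, the same representation Lua 5.2 uses for every number by default. A few lines of TypeScript therefore show the limit a 5.2 plugin would hit with large integer values such as IDs or byte counts. This is an illustration of the number model, not Lua code from our plugins.

```typescript
// A TypeScript `number` is an IEEE 754 double, like every number in Lua 5.2.
// Integers are exact only up to 2^53 in this representation.
const limit = 2 ** 53; // 9007199254740992

// Adding 1 past the limit is silently lost in a float-only number type.
const floatSum = limit + 1;
const floatLosesPrecision = floatSum === limit;

// A real integer type (BigInt here, or Lua 5.3+ integers) keeps the value.
const intSum = BigInt(limit) + 1n;

console.log(floatLosesPrecision); // true
console.log(intSum.toString()); // 9007199254740993
console.log(Number.isSafeInteger(limit)); // false
```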

The Pain of Defensive Lua

Want to see something really painful? Here's how you handle optional chaining in a modern language:

```typescript
const errorType = response.error?.type;
```

Simple, right? Here's what we had to write in Lua to get the same behavior:

```lua
-- Walk a chain of keys, returning nil as soon as a step is missing
-- or is not a table (the equivalent of `response.error?.type`).
-- The parameter is named `tbl` to avoid shadowing Lua's `table` library.
local function safe_get(tbl, ...)
  if tbl == nil then return nil end
  local current = tbl
  for _, key in ipairs({...}) do
    if type(current) ~= "table" then return nil end
    current = current[key]
    if current == nil then return nil end
  end
  return current
end

-- Usage
local error_type = safe_get(response, "error", "type")
```

Every language "feature" we wanted looked like this. Plus, all of it would need to be rewritten for Lua 5.2. We didn't want to give up on Wasm, though: part of Taskless' strength was that plugins operated independently of the host language.

Making the Right Choice

My co-founder and I went to BrowserTech SF's Wasm meetup and did what any sensible engineers would do - printed out questions and asked people smarter than us about our WebAssembly problem. We consistently got one of two answers:

- Write our own WebAssembly-friendly Lua implementation

- Bundle existing language runtimes into WebAssembly modules

The second option won out. My C scares people (including me), and the WebAssembly ecosystem has matured enough that we didn't need to reinvent this particular wheel. This led me to Extism, and honestly, their tagline should probably just be "Wasm toolchains that actually work."

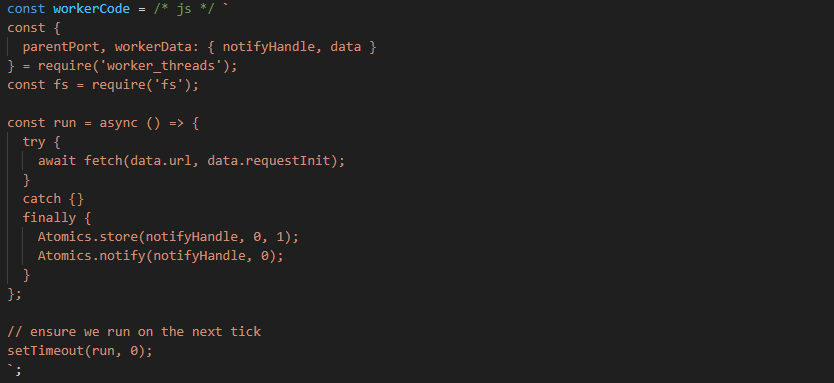

What makes Extism stand out is how it solves the two problems that make everyone hate working with WebAssembly: defining interfaces and handling data transfer between host and module. If you've ever tried to pass a string to a WebAssembly module, you know the pain - WebAssembly functions only understand numbers (integers and floats). Everything else requires building some kind of transport format.
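For a sense of what that transport plumbing involves, here is a minimal hand-rolled sketch of the common pointer/length convention: the host copies UTF-8 bytes into linear memory and passes two integers instead of a string. It uses a standalone `WebAssembly.Memory` and a hypothetical bump allocator (`writeString` and `readString` are illustrative names). A real module would export its own memory and allocation function, and Extism's actual protocol differs in the details.

```typescript
// Wasm functions only exchange numbers, so a string must be copied into
// linear memory and described by a (pointer, length) pair. This sketch uses
// a standalone memory; a real host would use memory exported by the module.
const memory = new WebAssembly.Memory({ initial: 1 }); // 1 page = 64 KiB
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Hypothetical bump allocator: real modules export their own alloc function.
let nextFree = 0;

/** Copies `text` into linear memory and returns the [ptr, len] pair to pass. */
function writeString(text: string): [number, number] {
  const bytes = encoder.encode(text);
  if (nextFree + bytes.length > memory.buffer.byteLength) {
    throw new RangeError("out of linear memory");
  }
  const ptr = nextFree;
  new Uint8Array(memory.buffer, ptr, bytes.length).set(bytes);
  nextFree += bytes.length;
  return [ptr, bytes.length];
}

/** Reads a string back out of linear memory from a [ptr, len] pair. */
function readString(ptr: number, len: number): string {
  return decoder.decode(new Uint8Array(memory.buffer, ptr, len));
}

const [ptr, len] = writeString('{"status":400}');
console.log(ptr, len); // 0 14
console.log(readString(ptr, len)); // {"status":400}
```

Note that the length is a byte count, not a character count, which is one of the details every hand-written transport layer has to get right.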

Extism handles all of this, and better yet, it does it in ways that feel native to each language:

- TypeScript? Define your exports in `.d.ts` files like you always do, with globals for message passing

- Rust? Tack on a macro and it's strings in and out of the function

- Go? Add a comment (Go doesn't support macros and the community considers that a Very Good Thing) and then call `pdk.*` for your integration methods

The end result? Plugin developers can work in whatever language they want without learning the intricacies of WebAssembly. No more worrying about memory management, no more building complex FFI layers, just write code that works.

The Migration

Here's the part that still surprises me - the actual migration took about six hours. Four hours learning Extism and building our implementation, two hours cleaning up our JavaScript client and optimizing things. The biggest change was structural: instead of requiring Lua code, plugins now just need to implement `pre` and `post` functions. That's it.
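The host side of that pre/post contract can be small. The sketch below assumes a loaded Pack behaves like an object with `pre` and `post` functions that take and return JSON strings; `PackPlugin`, `withPack`, and the in-process `fakePack` are hypothetical stand-ins for illustration, not Taskless client code or the Extism SDK API. The one detail it borrows from the Pack example later in this post is that the `context` returned by `pre` is passed back into `post`.

```typescript
// Hypothetical shapes, loosely modeled on the Pack example later in this post.
type Capture = Record<string, unknown>;
interface PreOutput { capture: Capture; context: Record<string, unknown>; }
interface PostOutput { capture: Capture; }
interface HttpResponse { status: number; body: unknown; }

// Hypothetical: a loaded Pack exposes two exports with JSON strings in and out.
interface PackPlugin {
  pre(input: string): string;
  post(input: string): string;
}

/** Runs one request through a Pack's pre/post hooks and merges the captures. */
async function withPack(
  plugin: PackPlugin,
  request: { url: string },
  send: () => Promise<HttpResponse>,
): Promise<{ response: HttpResponse; capture: Capture }> {
  const pre = JSON.parse(plugin.pre(JSON.stringify({ request }))) as PreOutput;
  const response = await send();
  // The context produced by pre() is handed back to post() unchanged.
  const post = JSON.parse(
    plugin.post(JSON.stringify({ request, response, context: pre.context })),
  ) as PostOutput;
  return { response, capture: { ...pre.capture, ...post.capture } };
}

// In-process stand-in for a compiled Wasm Pack, for demonstration only.
const fakePack: PackPlugin = {
  pre: (input) => {
    const { request } = JSON.parse(input);
    return JSON.stringify({ capture: { url: request.url }, context: { attempt: 1 } });
  },
  post: (input) => {
    const { response, context } = JSON.parse(input);
    return JSON.stringify({ capture: { status: response.status, attempt: context.attempt } });
  },
};

withPack(fakePack, { url: "https://api.example.com/v1" }, async () => ({
  status: 402,
  body: { error: { type: "card_error" } },
})).then(({ capture }) => console.log(JSON.stringify(capture)));
// {"url":"https://api.example.com/v1","status":402,"attempt":1}
```

Because the host only ever sees JSON strings crossing the boundary, the Pack behind `PackPlugin` can be swapped for one compiled from another language without touching this code.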

We didn't want to make radical changes to the Pack schema, so right now we're including the Wasm files as part of the pack configuration. In the near future, we'll serve the Wasm files from the edge, close to your application.
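One way to carry a module inside a configuration document is to base64-encode its bytes. This sketch assumes that encoding and a hypothetical `PackConfig` shape with `name`, `version`, and `wasm` fields (not the real Pack schema). It decodes the bytes and checks them with `WebAssembly.validate` before anything tries to instantiate them.

```typescript
// Hypothetical Pack shape: the compiled module travels inside the config
// as a base64 string, so no extra file has to be fetched at startup.
interface PackConfig {
  name: string;
  version: string;
  wasm: string; // base64-encoded .wasm bytes
}

/** Decodes the embedded module and checks that it is well-formed Wasm. */
function loadPackBytes(pack: PackConfig): Uint8Array {
  const bytes = Uint8Array.from(atob(pack.wasm), (ch) => ch.charCodeAt(0));
  if (!WebAssembly.validate(bytes)) {
    throw new Error(`Pack ${pack.name}@${pack.version} contains invalid Wasm`);
  }
  return bytes;
}

// The smallest valid module: the "\0asm" magic number followed by version 1.
const emptyModule = new Uint8Array([0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00]);
const pack: PackConfig = {
  name: "example",
  version: "0.0.1",
  wasm: btoa(String.fromCharCode(...emptyModule)),
};

console.log(pack.wasm); // AGFzbQEAAAA=
console.log(loadPackBytes(pack).length); // 8
```

Base64 inflates the payload by roughly a third, which is one reason serving the raw Wasm files separately becomes attractive as Packs grow.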

Real Benefits in Production

Let's talk about what this means in practice. Take Stripe's error responses - they're packed with useful information that usually gets lost in traditional APM and exception logging tools:

- `error.type` tells you exactly what kind of error you hit
- `request_log_url` links directly to the relevant section of your Stripe Workbench
- `doc_url` points you to the docs page with troubleshooting steps for your specific error
- `code` gives you the exact error enum from Stripe instead of a generic `4xx` error
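As a rough sketch of the extraction logic such a Pack might contain, the TypeScript below narrows an untyped response body once and then relies on optional chaining for missing keys. The snake_case field names follow Stripe's error object; `StripeErrorDetails`, `extractStripeError`, and the sample body are hypothetical, for illustration only.

```typescript
// Hypothetical result type; the source field names follow Stripe's error object.
interface StripeErrorDetails {
  type?: string;
  code?: string;
  docUrl?: string;
  requestLogUrl?: string;
}

/** Pulls Stripe's diagnostic fields out of an untyped response body. */
function extractStripeError(body: unknown): StripeErrorDetails | undefined {
  // Narrow `unknown` once; optional chaining tolerates null and missing keys.
  const error = (body as { error?: Record<string, unknown> } | null | undefined)?.error;
  if (typeof error !== "object" || error === null) return undefined;

  // Keep only string values so unexpected shapes never leak into captures.
  const str = (value: unknown) => (typeof value === "string" ? value : undefined);
  return {
    type: str(error.type),
    code: str(error.code),
    docUrl: str(error.doc_url),
    requestLogUrl: str(error.request_log_url),
  };
}

// Hypothetical sample body for illustration.
const sample = {
  error: {
    type: "card_error",
    code: "card_declined",
    doc_url: "https://stripe.com/docs/error-codes/card-declined",
    message: "Your card was declined.",
  },
};

console.log(extractStripeError(sample)?.code); // card_declined
console.log(extractStripeError({ ok: true })); // undefined
```

Because every lookup tolerates a missing key, a malformed or non-Stripe body yields `undefined` instead of throwing.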

Before, writing this handler meant careful Lua code for every edge case, nil-safe lookups, and a lot of conditional checks to avoid a runtime error. Now we have optional chaining and pattern matching in half a dozen languages, any of which is better suited to the task. It's not just less code - it's safer, clearer, and easier to maintain.

The Trade-offs

Everything has trade-offs, and we found ours in binary size. Here's how our plugins typically shake out:

- Rust plugins: ~200KB

- Go plugins: ~400KB

- TypeScript/JavaScript plugins: ~800KB

That last number comes from QuickJS, which Extism uses for JavaScript support. It's bigger than we'd like, but the flexibility is worth it. And if we ever need to optimize a specific plugin, we can just rewrite it in Rust with no impact on Taskless users beyond a smaller file size.

What's Next

Well, for starters, our Open Source Loader now uses Wasm for its core Pack, making it easier to add error message capture as part of the post-request lifecycle.

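```typescript
// PreInput, PreOutput, PostInput, and PostOutput come from the Pack's own
// type definitions (not shown). Host is the global provided by Extism's JS PDK.

// Runs before the request is sent; the context it returns is read by post().
export function pre() {
  const input = JSON.parse(Host.inputString()) as PreInput;

  const output: PreOutput = {
    capture: {
      // ... captured data
    },
    context: {
      start: Date.now(),
    },
  };

  Host.outputString(JSON.stringify(output));
}

// Runs after the response arrives; records duration and, on 4xx/5xx, an error.
export function post() {
  const input = JSON.parse(Host.inputString()) as PostInput;

  // Different APIs put their error message under different keys.
  let error: string | undefined;
  if (input.response?.status && input.response.status >= 400) {
    error =
      input.response.body?.error ??
      input.response.body?.message ??
      input.response.body?.err?.type;
  }

  const output: PostOutput = {
    capture: {
      durationMs: Date.now() - input.context.start,
      // Only include fields that are actually present.
      ...(input.response?.status ? { status: input.response.status } : {}),
      ...(error ? { error } : {}),
    },
  };

  Host.outputString(JSON.stringify(output));
}
```

And we're excited that the WebAssembly ecosystem keeps getting better. We're particularly looking forward to: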

- The Component Model, which will make cross-language code sharing even easier

- WASI improvements for more sophisticated plugin capabilities, including I/O, while maintaining the zero-trust sandbox

- Growing language support giving developers more options for rolling their own Packs on Taskless

Moving Forward

This migration did more than just clean up our codebase - it fundamentally changed how we think about building our Packs. Shifting more of our logic into the Wasm layer means the clients get thinner, and thinner clients are easier to maintain.

The WebAssembly ecosystem has grown up. We're past the "wouldn't it be cool if..." phase and into solving real problems. For us at Taskless, that means we can focus on what matters: helping developers understand and manage their third-party dependencies without getting bogged down in implementation details.